So, I’ve been busy working with a few updates to the code for Deep DX (read more if you don’t know what I’m referring to) and I also created an area on this site where I will start to put up fresh sounds every now and then.

What’s New?

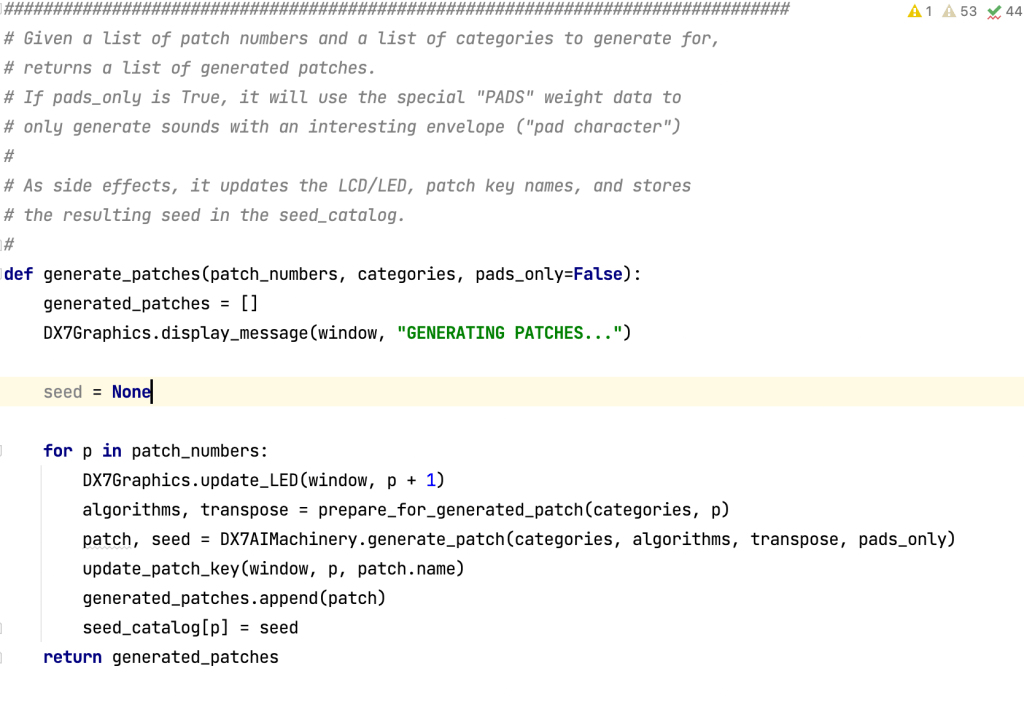

Since my last post, the opportunity to include fixed frequencies for operators as a part of training data has been added. Previously, I converted any patch with operators locked to a fixed frequency to “ratio”, meaning they would track the keyboard, as an effort to reduce the number of variables (a k a “things that can go wrong”) but in the end, the potential increase in potential sounds, and the increased potential to get really interesting sounds was too tempting.

Surprisingly, quite a few of the sounds I use as training data contained operators with fixed frequencies – close to 30% with my latest set – so it would make sense to include it as well.

Scanned 31066 patches in training data

9178 patches with one or more fixed operators (29%)After training and a few evenings of test runs, it turned out quite close to my expectations:

- Yes, the sound pool became wider and included more interesting sounds, and

- Yes, there are now more duds1 than previously.

In some cases, it seems like the model is not fully aware of the connection between frequency ratios and operator mode. Usually, one or two sounds per bank will not sound right, but flipping the switch (from “fixed” to “ratio” or the other way around) will immediately fix the sound. This indicates that the connection between frequencies and oscillator mode has to be emphasized in the training data somehow. This will be put on the backlog for now, and instead I wrote some code that heuristically tries to “fix” the sound in the described manner if it doesn’t seem right.

Quite a few of the patches now use this technique to generate a “click” in the attack portion of the sound, something I was happy to note, seeing that this was a common technique when creating certain type of patches back in the day.

1 The scientific term for “patches that sound awful”

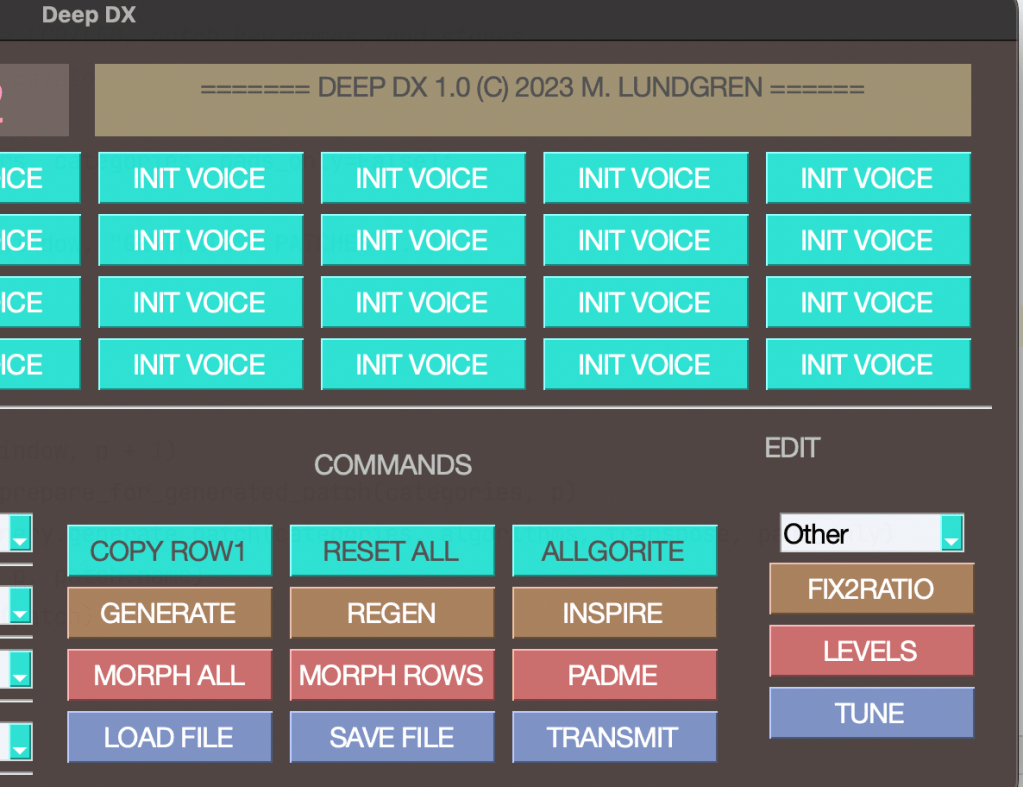

Pads for Days, Algorithms for All

I also couldn’t resist the temptation to train a specific model for pads. I used my previous code for selecting training data to specifically look for patches with “interesting envelopes” and add them to a specific training data set. What makes an envelope “interesting”, one may ask? Well, in this case, it was to be any sound that had at least one operator with a long, sweeping envelope (typically: low rates and in particular a slow attack).

By pressing the proper button (guess which?) a full bank of 32 “pad” sounds are generated. To my delight, it actually works, and the fruits of my labour can be toyed with by downloading the appropriate sound banks and installing them into your DX7 or compatible instrument.

Another thing I added was the ability to generate specifically sounds for a particular algorithm, let’s say a full bank of sounds suitable for algorithm 1. To increase the amount of training data, I grouped similar algorithms together (so in this example, it would actually generate 32 sounds for algorithm 2, simply because 1 and 2 yield similar results, and algorithm 2 is far more prevalent in the training data).

This can also be sampled by testing the different “algorithm banks” i made available for download.

Sounds

As mentioned, a new area has been created on the site, where I will gradually upload and categorize sounds that are free to download and use. I hope to expand the universe of DX7 sounds quite a bit over time, and if you find any sounds useful, I’d be happy to hear about it! Also, if you have any ideas for sounds or other expansions, let me know.

These banks have been curated, that is, a few of the patches have been tweaked manually to fix the problem described above with frequency problems. Usually, it boils down to switching a single operator mode, and it concerns at most a couple of patches per bank. The banks themselves have not been curated into “best of” collections – they contain exactly the patches as generated by the system.

Names for the patches are randomized (from a dictionary that hopefully contains no nasty words). In case you find the names weird or not really descriptive, now you know why! Perhaps “DX7 Patch to Descriptive Name” could be a future AI model to add to the backlog…

With summer vacation around the corner, I will have more time to continue the series of articles on how this was done, and how you can try it out for yourself – in the meantime, feel free to browse the sounds here:

Hi,

This is great stuff. Really nice read and also great sounds.

I had some fantasies about this as well after seeing the ‘This cartridge does not exist’ .

I was hoping I would be able to make my own dataset and train it.

Do you have any plans to release the sourcecode on github? Is it all python based?

I got a TX7 and a TX816.

Yesterday I fixed the last module with a new mpu so all 8 modules are working.

I love the sound of the DX7 MK1.

AFX twin used midimutant which could listen to a sound via a mic input and would then create something similar for the DX7.

I also found this editor: https://github.com/eclab/edisyn

It has some machine learning models for the DX7.

LikeLike

Hi, and thank you for your kind comments! I’m going to go over the details of the implementation in upcoming posts, but it is my intention to eventually make the code available, either as source code, or a standalone app. There’s some refactoring and cleanup to be made before that, though 🙂 It’s entirely written in Python at this stage.

I’m slightly envious of your TX816 – they are hard to come by and I hope to have one in my setup at some point. For now, the Kodamo EssenceFM I borrowed serves a similar purpose as the DX7 import actually works quite ok. There’s a function in my code that makes it possible to generate slight variations of a sound, for use with multiple units playing simultaneously. I’ve used it when having my TX7’s playing in unison, but I realize now that it would be interesting to generate banks with 8 different variations of each sound, for the TX816.

The app used by AFX was also an inspiration to me, although I seem to remember it using genetic algorithms rather than neural nets?

LikeLike

[…] Deep DX: Update and New Sounds […]

LikeLike